Face tracking

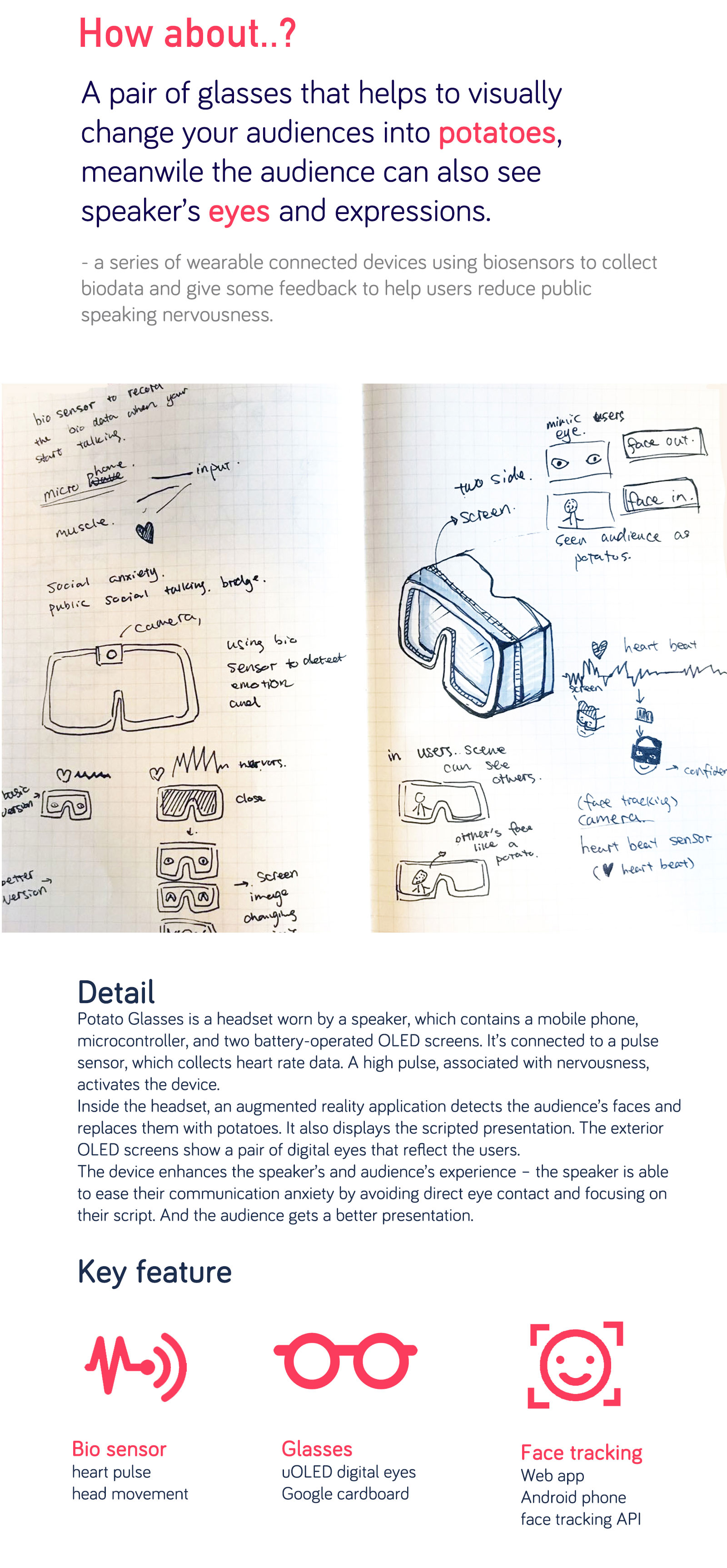

I played with several face tracking libraries, hoping to build an application that replaces the audience's faces with a bunch of potatoes.

At first, I used still images to test the effect I wanted. I wrote a web application in JavaScript that tracks the faces in an image and replaces each face with a potato.
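The overlay step can be sketched as follows. This is a minimal sketch, not the app's actual code. It assumes the face tracker returns boxes as `{x, y, width, height}` in canvas pixels and that the potato is a loaded `HTMLImageElement`. The names `potatoRect` and `drawPotatoes` and the `padding` factor are hypothetical. It scales the potato so it covers each slightly enlarged face box, then draws it with the standard canvas `drawImage` call.

```javascript
// Compute where to draw the potato so it fully covers one face box.
// face: {x, y, width, height} in canvas pixels (assumed tracker output format).
// potatoW / potatoH: natural size of the potato image.
// padding: hypothetical factor that enlarges the box so hair and chin are hidden too.
function potatoRect(face, potatoW, potatoH, padding = 1.2) {
  const targetW = face.width * padding;
  const targetH = face.height * padding;
  // "Cover" scaling: take the larger scale so no part of the padded box shows through,
  // while keeping the potato's aspect ratio.
  const s = Math.max(targetW / potatoW, targetH / potatoH);
  const w = potatoW * s;
  const h = potatoH * s;
  // Center the potato on the face.
  const cx = face.x + face.width / 2;
  const cy = face.y + face.height / 2;
  return { x: cx - w / 2, y: cy - h / 2, width: w, height: h };
}

// Draw one potato per detected face onto a 2D canvas context.
function drawPotatoes(ctx, potatoImg, faces, padding = 1.2) {
  for (const face of faces) {
    const r = potatoRect(face, potatoImg.naturalWidth, potatoImg.naturalHeight, padding);
    ctx.drawImage(potatoImg, r.x, r.y, r.width, r.height);
  }
}
```

Calling `drawPotatoes` right after drawing the original photo onto the canvas leaves every detected face hidden behind a potato.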

Then I used a webcam to capture video and replace faces with potatoes in real time.
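Moving from still images to the webcam mostly means repeating the same detect-and-draw step on every frame. Below is a minimal browser sketch, assuming `navigator.mediaDevices.getUserMedia` for the camera and `requestAnimationFrame` for the loop. The names `startWebcam`, `runFrameLoop` and `potatoStep`, and the `detectFaces` / `drawOverlay` hooks (standing in for the tracking library and the potato drawing), are hypothetical, not the project's code.

```javascript
// Ask for the front-facing camera and attach the stream to a <video> element.
async function startWebcam(videoEl) {
  const stream = await navigator.mediaDevices.getUserMedia({
    video: { facingMode: "user" },
    audio: false,
  });
  videoEl.srcObject = stream;
  await videoEl.play();
  return stream;
}

// Call step(timestamp) once per animation frame until the returned stop function is called.
// raf defaults to requestAnimationFrame; it is a parameter so the loop can be driven manually.
function runFrameLoop(step, raf = (cb) => requestAnimationFrame(cb)) {
  let running = true;
  function tick(t) {
    if (!running) return;
    step(t);
    raf(tick);
  }
  raf(tick);
  return () => {
    running = false;
  };
}

// One frame: copy the video into the canvas, detect faces, then draw the overlay on top.
// detectFaces(ctx) and drawOverlay(ctx, faces) are hypothetical hooks.
function potatoStep(video, ctx, detectFaces, drawOverlay) {
  return () => {
    ctx.drawImage(video, 0, 0, ctx.canvas.width, ctx.canvas.height);
    const faces = detectFaces(ctx);
    drawOverlay(ctx, faces);
  };
}
```

In the page, this would be wired up as `await startWebcam(video)` followed by `runFrameLoop(potatoStep(video, ctx, detectFaces, drawOverlay))`.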

I tried different face tracking libraries for real-time tracking. However, none of them worked well on a phone. Since the application has to run on a phone, the library needs to be lightweight and the application needs to run fast. I know there are ways to make it run faster, such as developing a native app or using openFrameworks, but I chose a web application because I think the web has more potential in the future. It would be cool if users could share what they see on a website, or connect the face tracking app to their online presentation pages and display their scripted presentation in the app.

Then I used the JSFeat library to track multiple faces in real time. I grab the frames from the web camera, filter them, and display them again using HTML5 and JavaScript. I then use Three.js to build a stereoscopic view, creating an Augmented Reality experience for Google Cardboard.
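For Cardboard, each frame is drawn twice, side by side, one half of the screen per eye. Here is a minimal sketch of that split, assuming a `THREE.WebGLRenderer` and a `THREE.StereoCamera` (whose `update(camera)` fills in `cameraL` and `cameraR`). The helper names and the way the scene and cameras are passed in are assumptions, not the project's code. The renderer is only touched through `setScissorTest`, `setViewport`, `setScissor` and `render`.

```javascript
// Split the drawing area into two side-by-side viewports, one per eye.
function sideBySideViewports(width, height) {
  const half = Math.floor(width / 2);
  return {
    left: { x: 0, y: 0, width: half, height },
    right: { x: half, y: 0, width: width - half, height },
  };
}

// Render the scene once per eye.
// renderer: assumed THREE.WebGLRenderer; stereo: assumed THREE.StereoCamera;
// camera: the main THREE.PerspectiveCamera (its aspect should match one eye's viewport).
function renderStereo(renderer, scene, camera, stereo, width, height) {
  stereo.update(camera); // derive the left/right eye cameras from the main camera
  const vp = sideBySideViewports(width, height);
  const eyes = [
    [vp.left, stereo.cameraL],
    [vp.right, stereo.cameraR],
  ];
  renderer.setScissorTest(true); // restrict each render to its own half of the screen
  for (const [rect, eyeCamera] of eyes) {
    renderer.setViewport(rect.x, rect.y, rect.width, rect.height);
    renderer.setScissor(rect.x, rect.y, rect.width, rect.height);
    renderer.render(scene, eyeCamera);
  }
  renderer.setScissorTest(false);
}
```

The `StereoCamera` is best created once, outside the render loop, and `renderStereo` called on every frame with the current window size.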

I also wrapped the app in PhoneGap so that it would run faster and more stably.

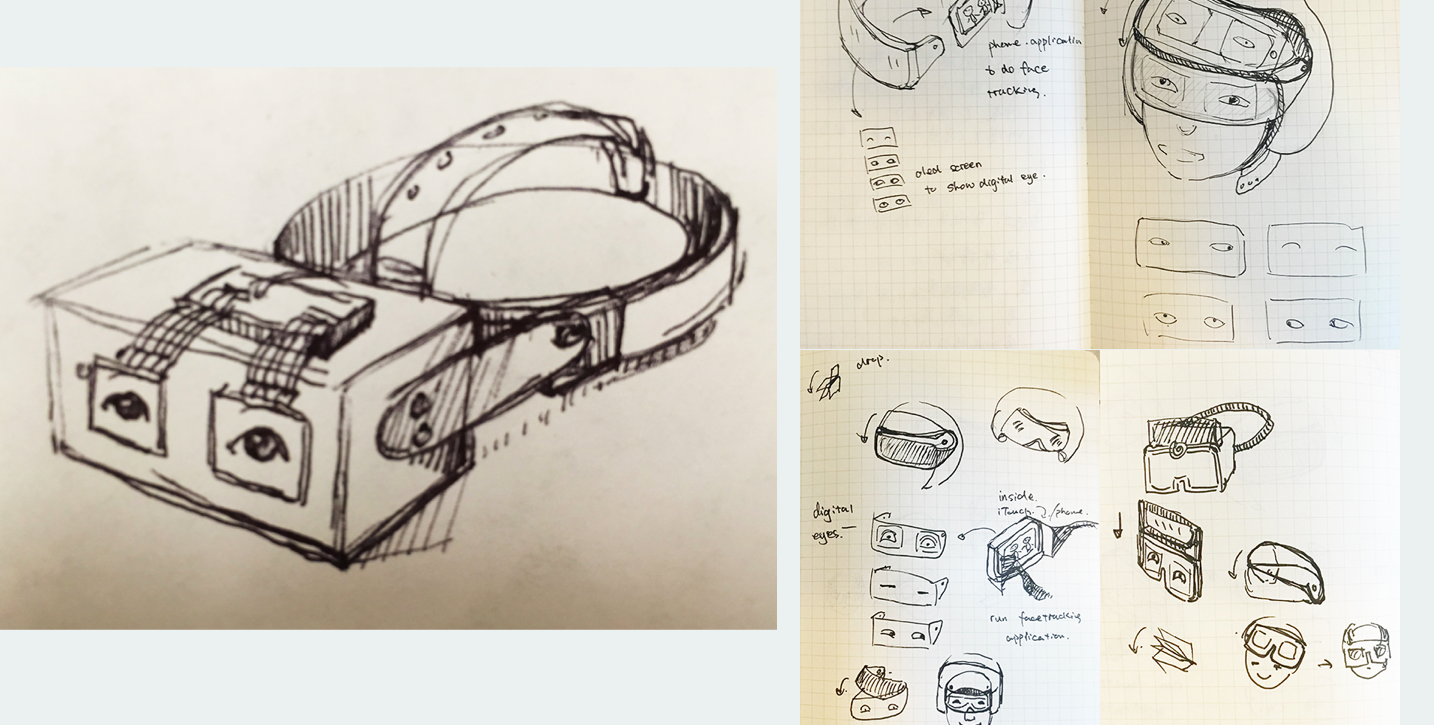

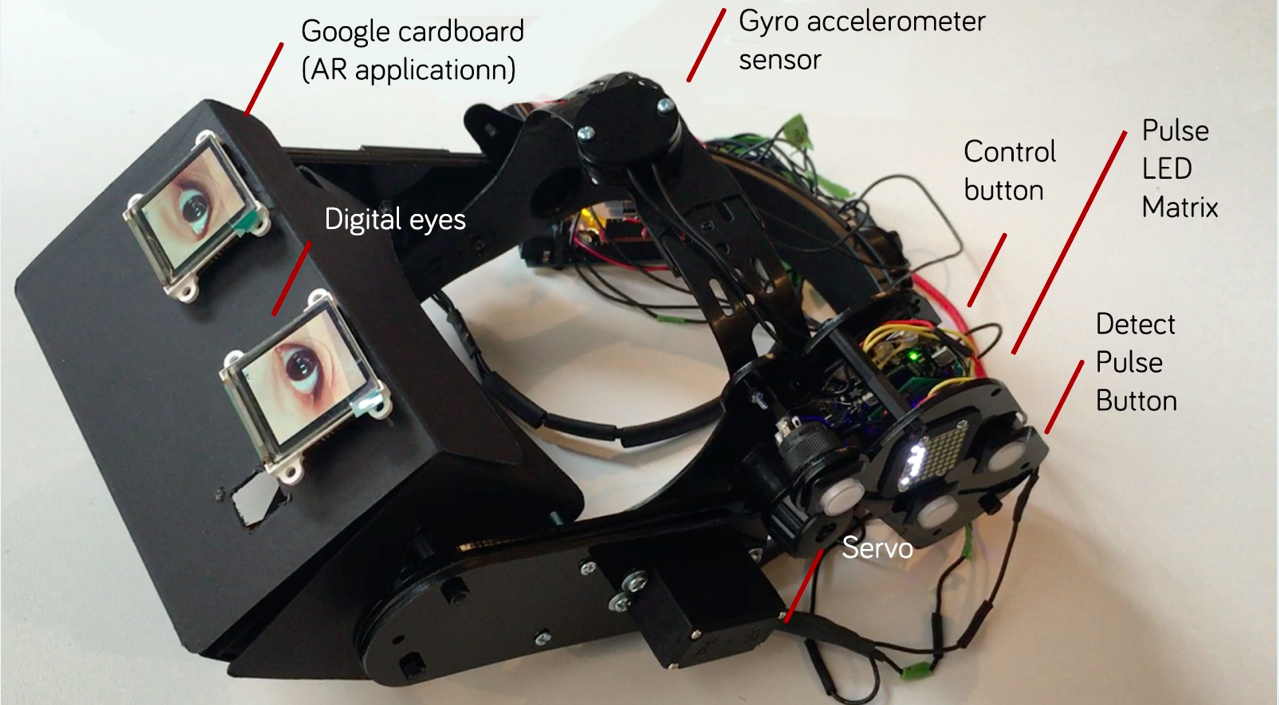

Digital eyes

I placed two screens on the front of my headgear to mimic the speaker's eyes. In this way, I wanted to create a kind of natural communication between the audience and the speaker. Eye contact is very important in a presentation, even though a nervous speaker doesn't want to see the audience's serious faces and eyes. I wanted to use digital eyes to represent the speaker's emotions and expressions.

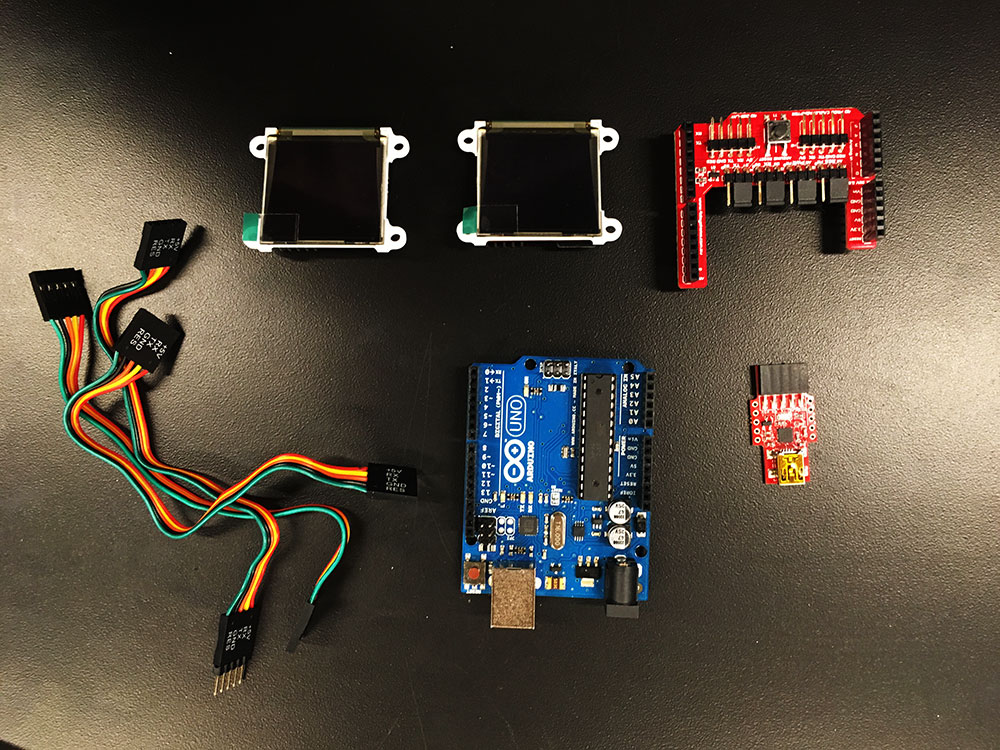

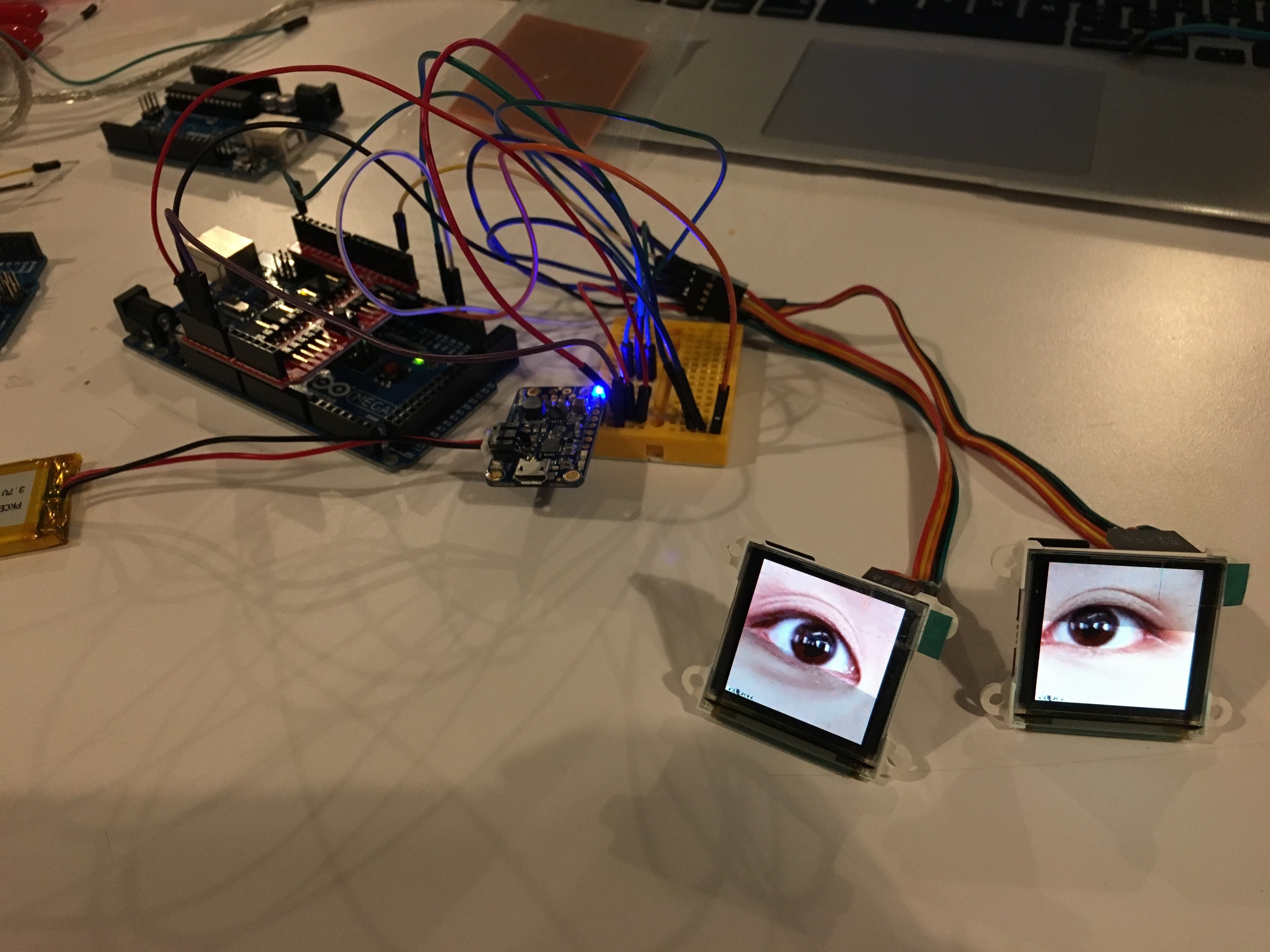

After doing some research, I found that the Arduino does not have enough processing power to play video, so at first I wrote code to run the eye animation on the Arduino.

But the digital eyes looked too robotic. I wanted them to look more like human eyes, which could make the audience feel that the speaker is closer to them. Fortunately, I found the 4D Systems uOLED screen, which is powerful enough to play video by itself. So I filmed my own eyes with a camera and then edited the footage in After Effects to create a series of clips representing different kinds of eye movement.

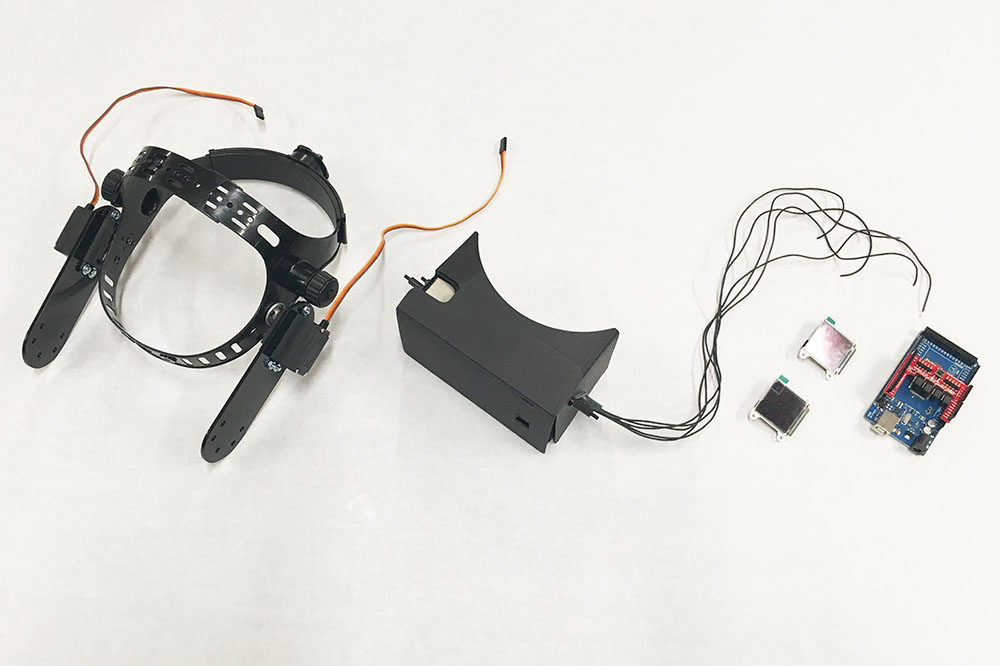

I added a gyroscope/accelerometer sensor to the top of my headset to capture the user's head movement, and made the digital eyes change based on that movement. In this way, the eyes mimic a kind of natural eye contact between the user and the audience.
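The head-to-eyes mapping can be sketched as a small state machine. This is a hypothetical JavaScript sketch of the logic, not the headset's firmware. It assumes the sensor readings have already been converted into yaw and pitch angles in degrees (negative yaw = head turned left, positive pitch = head tilted up). The clip names, the 10° dead zone and the smoothing factor are made-up values. The angles are smoothed with an exponential moving average, the dominant direction picks a clip, and the screens are only told to switch when the choice changes.

```javascript
// Exponential moving average: alpha = 1 means no smoothing, smaller values smooth more.
function makeSmoother(alpha) {
  let value = null;
  return (x) => {
    value = value === null ? x : alpha * x + (1 - alpha) * value;
    return value;
  };
}

// Pick a hypothetical eye clip from head angles (degrees), with a dead zone around center.
function eyeClipFor(yawDeg, pitchDeg, deadZoneDeg = 10) {
  if (Math.abs(yawDeg) < deadZoneDeg && Math.abs(pitchDeg) < deadZoneDeg) return "center";
  if (Math.abs(yawDeg) >= Math.abs(pitchDeg)) return yawDeg < 0 ? "left" : "right";
  return pitchDeg > 0 ? "up" : "down";
}

// Feed raw angles in; playClip(name) is called only when the chosen clip changes.
// playClip is a hypothetical hook that would tell the screens which video to play.
function makeEyeController(playClip, alpha = 0.3) {
  const yawS = makeSmoother(alpha);
  const pitchS = makeSmoother(alpha);
  let current = null;
  return (yawDeg, pitchDeg) => {
    const clip = eyeClipFor(yawS(yawDeg), pitchS(pitchDeg));
    if (clip !== current) {
      current = clip;
      playClip(clip);
    }
    return clip;
  };
}
```

Switching only on change matters here because restarting a clip on every sensor reading would make the eyes stutter.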

Headgear

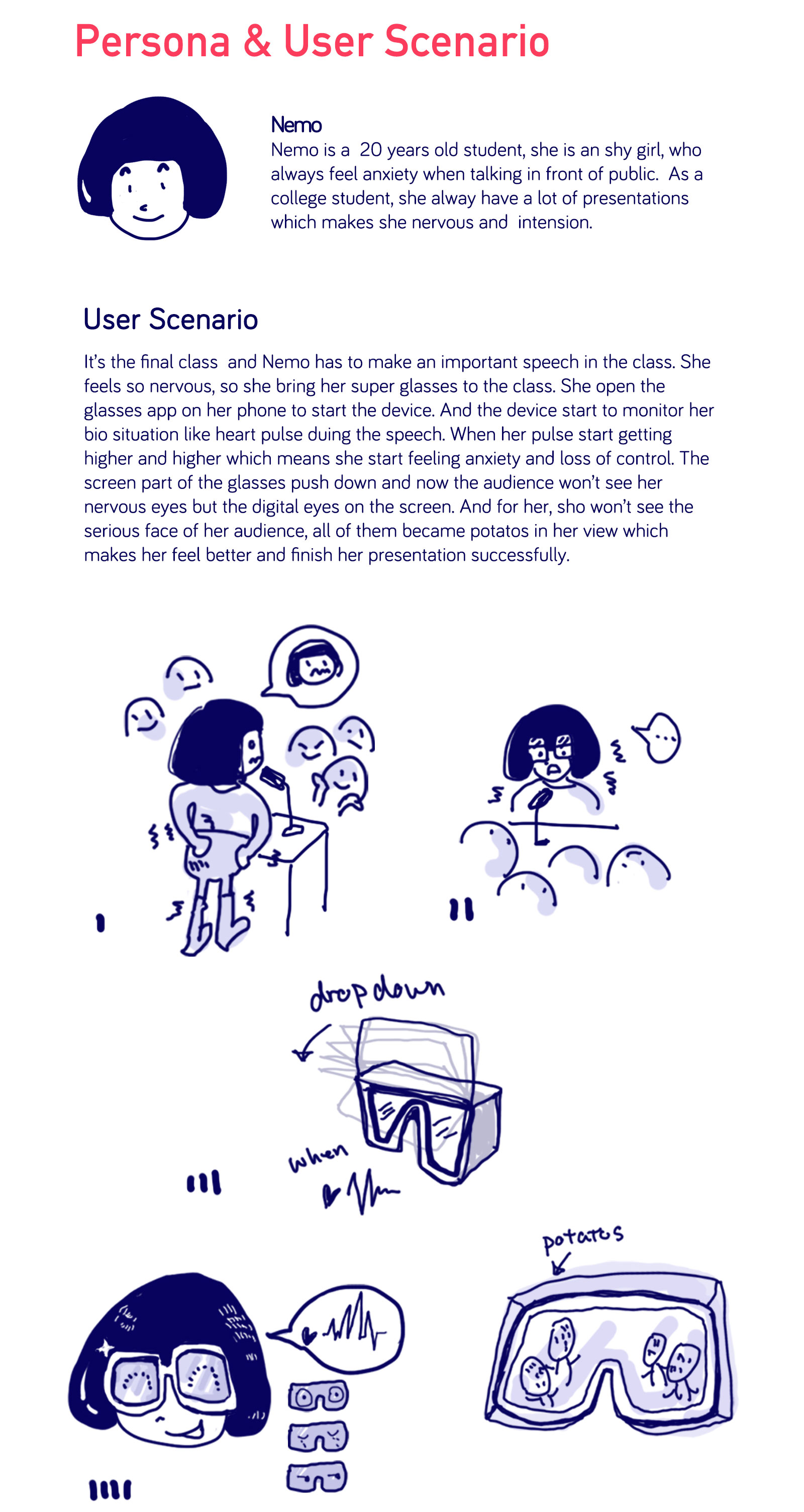

Then I started to build my headgear, with glasses that are triggered by the user's heart rate. I used a Flora, a Polar pulse sensor and a servo to build this mechanical headgear. The Polar pulse sensor reads the user's pulse; when it detects a pulse higher than 80 beats per minute, which is usually associated with nervousness, the glasses are triggered and move down to cover the eyes. The audience sees the speaker's digital eyes, while the speaker sees the audience as potatoes in Google Cardboard.
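The trigger logic can be sketched as follows. Again, this is a hypothetical JavaScript sketch rather than the Flora code. It estimates beats per minute from the timestamps of recent beats and lowers the glasses when the rate goes above 80, as described. It also adds an assumed release threshold of 75 (hysteresis) so the glasses do not flap up and down when the pulse hovers around 80. The servo angles are placeholders.

```javascript
// Beats per minute from the timestamps (ms) of the most recent detected beats.
function bpmFromBeats(beatTimesMs) {
  if (beatTimesMs.length < 2) return null;
  const span = beatTimesMs[beatTimesMs.length - 1] - beatTimesMs[0];
  if (span <= 0) return null;
  const intervals = beatTimesMs.length - 1;
  return (60000 * intervals) / span;
}

// Glasses go down above `threshold` bpm and only come back up below `release` bpm.
// The release value of 75 is an assumed hysteresis margin, not from the original project.
function makeGlassesController(threshold = 80, release = 75) {
  let down = false;
  return (bpm) => {
    if (bpm === null) return down; // no reading yet: keep the current position
    if (!down && bpm > threshold) down = true;
    else if (down && bpm < release) down = false;
    return down;
  };
}

// Placeholder servo angles for the two glasses positions.
const SERVO_UP_DEG = 0;
const SERVO_DOWN_DEG = 90;

function servoAngle(down) {
  return down ? SERVO_DOWN_DEG : SERVO_UP_DEG;
}
```

For example, beats at 0, 750, 1500 and 2250 ms give 3 intervals over 2250 ms, which is exactly 80 bpm. That is not "higher than 80", so the glasses stay up.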